February 26, 2026

Eclipse Foundation unveils full agenda for OCX 2026

by Natalia Loungou at February 26, 2026 12:00 PM

BRUSSELS - February 26, 2026 - The Eclipse Foundation, one of the world’s largest open source software organisations, has announced the full agenda for Open Community Experience (OCX 2026), taking place 21–23 April 2026 at The EGG conference centre in Brussels, Belgium.

OCX is the Foundation’s flagship conference and one of Europe’s leading gatherings for open source professionals, featuring nearly 150 sessions across six thematic tracks and five collocated communities. Over three dynamic days, developers, architects, researchers, and industry leaders will dive into the technologies, standards, and collaboration models shaping the next era of open innovation.

Following a successful debut in 2024, OCX 2026 returns with a significantly expanded technical program, growing from three to five collocated communities and increasing session volume by more than 35 percent. Each community offers deep domain expertise while connecting attendees to the broader open source ecosystem.

“Like the Eclipse Foundation itself, OCX 2026 continues to grow in scope, diversity, and depth,” said Mike Milinkovich, executive director of the Eclipse Foundation. “As AI reshapes software development, software-defined vehicles transform mobility, and new regulations redefine accountability, open collaboration has never been more essential. This year’s agenda reflects the real challenges and real opportunities facing our community.”

What developers will find at OCX 2026

OCX 2026 delivers three days of practical engineering insight, strategic perspective, and meaningful community-driven collaboration.

Main OCX program: Cross-domain innovation in action

The core program brings together leading practitioners to address software security, enterprise Java evolution, embedded and edge computing, cloud-native architectures, governance, and cross-industry innovation. Sessions are grounded in real-world implementation and built for professionals shipping and scaling software. Attendees leave with actionable techniques, architectural insight, and proven approaches they can apply immediately.

Five collocated events within a single conference experience

OCX 2026 features five collocated events within the conference, each offering a concentrated experience for specific technical communities while remaining fully accessible with a single pass.

- Open Community for Tooling

Formerly EclipseCon, this event continues the legacy of one of the industry’s most respected developer tooling communities. Topics include AI-assisted IDEs, modeling frameworks, language servers, next-generation workflows, and productivity engineering. If you build tools or depend on them to deliver high-performance software, this community is essential.

Speaker Highlights:

- Programming in Every Language: Building Cultural Tools with Langium - Malik Lanlokun

- AI in Action: The Ultimate Live Demo with Theia AI - Jonas Helming

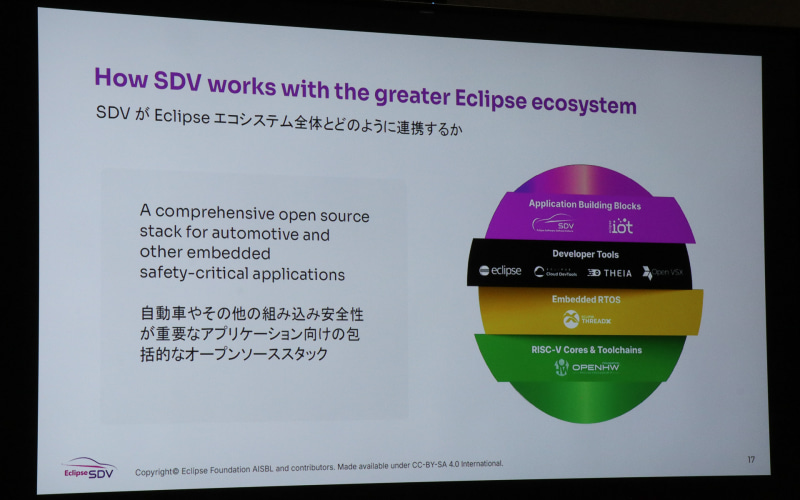

- Open Community for Automotive

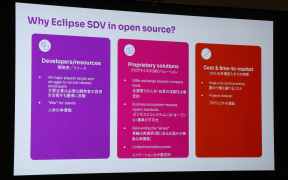

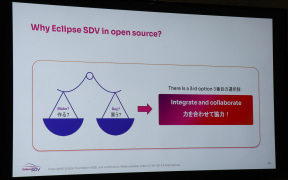

Open collaboration is accelerating the shift to software-defined vehicles. This event explores open standards, safety-conscious architectures, and real-world implementations. Engineers and platform architects will gain practical insight into the software foundations powering connected mobility.

Speaker Highlights:

- Diagnostics Reimagined: How Eclipse OpenSOVD Powers Open Collaboration and Standard Evolution - Thilo Schmitt & Alexander Mohr

- Fifty Shades of SDV: A Blueprint-Driven Roadmap for Orchestration Adoption - Naci Dai & Oliver Kral

- Open Community for AI

Open source is redefining how AI systems are built, validated, and governed. This event examines trustworthy AI frameworks, open model ecosystems, responsible governance strategies, data sharing and dataspaces, and production deployment lessons. Developers building production-grade AI systems will find both technical depth and forward-looking guidance.

Speaker Highlights:

- Commit to Quality: AI-Enhanced Testing in Open Source - Shelley Lambert & Longyu Zhang

- Understanding Machine Decisions - Haishi Bai

- Open Community for Compliance

With regulations such as the EU Cyber Resilience Act (CRA) raising expectations for secure software, compliance is now a core engineering concern. This event equips teams with practical strategies for security, licensing, and regulatory alignment without slowing innovation. If you deliver software into the European market, this content is critical.

Speaker Highlights:

- Taming the SBOM Chaos – A Legal Compass for the CRA and Open Source Compliance - Hendrik Schöttle

- Layered Compliance: Using the Swiss Cheese Model to Prevent Catastrophic Failure - Georg Link

- Open Community for Research

Presented in collaboration with the Apereo Foundation and academic partners, this event demonstrates how open source accelerates the path from research to scalable, real-world systems. Topics include reproducibility, open science practices, and production-ready implementations bridging academia and industry.

Speaker Highlights:

- VOStack open-source Software Stack for the virtualization of IoT devices - Anastasios Zafeiropoulos

- UniTime Overview: From Research to Practice - Tomas Muller

Featured keynote speakers

OCX 2026 brings together perspectives from high-performance sport, European digital policy, and global open source leadership.

- Ruth Buscombe - Formula 1 race strategist and F1TV analyst, Buscombe opens the conference with “The Winning Formula: What F1 Teaches Us About Marginal Gains, Teamwork, and Data-Driven Decision Making.” Her keynote connects the precision of elite motorsport with the collaborative performance culture of open source communities.

- Rolf Riemenschneider - Head of Sector IoT at the European Commission, Riemenschneider leads research and innovation under Horizon Europe, coordinating strategy for Cloud-Edge Computing and the Internet of Things. His keynote will explore Europe’s digital trajectory and the role of open technologies in strategic data spaces.

- Mike Milinkovich - Executive Director of the Eclipse Foundation since 2004, Milinkovich is a long-standing open source leader who has served on the boards of the Open Source Initiative and the OpenJDK community, and on the Executive Committee of the Java Community Process. He will share insight on the evolving role of open collaboration in a rapidly shifting technology landscape.

Register now and be part of what’s next

OCX 2026 is built for developers, architects, researchers, and decision-makers driving modern software forward. One registration unlocks the entire experience, including the main program and all five co-located events.

- Review the agenda

- Plan your sessions

- Secure your place at OCX 2026 and register now.

Discounted registration rates are available until March 16. Capacity at The EGG conference centre is limited, and early registration is recommended.

Thanks to our sponsors

OCX 2026 is made possible through the generous support of our sponsors, including SAP, TypeFox, EclipseSource, Azul, Obeo, Equo Tech, ETAS, Eurotech, LG, Red Hat, KentYou, OSGi, and Mercedes-Benz Tech Innovation GmbH. Their leadership and commitment to open source innovation help drive collaboration across industries and strengthen the global open source ecosystem. We sincerely thank all of our sponsors for their partnership and commitment.

Organisations interested in supporting OCX 2026 and engaging with the global open source ecosystem are invited to contact sponsors@OCXconf.org to request sponsorship information and review the sponsorship prospectus.

About the Eclipse Foundation

The Eclipse Foundation provides a global community of individuals and organisations with a vendor-neutral, business-friendly environment for open source collaboration and innovation. We host Adoptium, the Eclipse IDE, Jakarta EE, Open VSX, Software Defined Vehicle, and more than 400 high-impact open source projects. Headquartered in Brussels, Belgium, we are an international non-profit association supported by over 300 members. Our events, including Open Community Experience (OCX), bring together developers, industry leaders, and researchers from around the world. To learn more, follow us on X and LinkedIn, or visit eclipse.org.

Media contacts:

Schwartz Public Relations (Germany)

Julia Rauch/Marita Bäumer

Sendlinger Straße 42A

80331 Munich

EclipseFoundation@schwartzpr.de

+49 (89) 211 871 -70/ -62

514 Media Ltd (France, Italy, Spain)

Benoit Simoneau

M: +44 (0) 7891 920 370

Nichols Communications (Global Press Contact)

Jay Nichols

+1 408-772-1551

AI Voice and Interaction Agents in Production: 6 Lessons from the Field

by Jonas, Maximilian & Philip at February 26, 2026 12:00 AM

If you know EclipseSource, you probably know us for developer tools, IDEs, and technical AI topics. What you might not know is that we’ve been building AI voice agents for production use since before …

The post AI Voice and Interaction Agents in Production: 6 Lessons from the Field appeared first on EclipseSource.

February 25, 2026

Hardening the Open VSX Registry: Keeping it reliable at scale

by Denis Roy at February 25, 2026 02:45 PM

Denis Roy, Head of Information Technology, Eclipse Foundation

As the Open VSX ecosystem continues to grow, keeping the registry stable is a top priority. Behind the scenes, we are strengthening the infrastructure so that even during peak loads or major provider outages, developer workflows remain uninterrupted.

In recent posts, we shared how the Open VSX Registry is strengthening supply-chain security with pre-publish checks and introducing operational guardrails through rate limiting to scale responsibly. As adoption and usage increase, the underlying infrastructure behind those improvements becomes just as important. This post focuses on that work: improving availability, reducing single points of failure, and making recovery faster and more predictable when incidents occur.

A hybrid, fail-safe architecture

We are currently transitioning to a hybrid infrastructure model, moving core services to AWS as our primary environment while keeping our on-premises infrastructure fully operational as a secondary site. Our primary AWS deployment is hosted in Europe, with our on-premises secondary environment located in Canada, reinforcing both operational resilience and regional diversity.

This is deliberate architectural diversity. AWS provides scale and flexibility. Our on-premises environment provides an independent fallback. If a cloud region experiences an outage, services can shift to infrastructure under our direct control.

The objective is simple: keep the registry online even when part of the underlying environment is not.
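The failover behaviour described above can be pictured as a priority-ordered health check. The Java sketch below is only an illustration under stated assumptions: the Site record, the site names, and the health checks are hypothetical, and in practice this decision is often made at the DNS or load-balancer layer rather than in application code.

```java
import java.util.List;
import java.util.Optional;
import java.util.function.BooleanSupplier;

// Hypothetical model of a deployment site; names and health checks are illustrative only.
record Site(String name, BooleanSupplier healthCheck) {}

final class FailoverSelector {
    private FailoverSelector() {}

    // Returns the first site, in priority order, whose health check passes.
    // The list order encodes preference: primary first, then fallbacks.
    static Optional<Site> activeSite(List<Site> sitesByPriority) {
        for (Site site : sitesByPriority) {
            if (site.healthCheck().getAsBoolean()) {
                return Optional.of(site);
            }
        }
        return Optional.empty(); // no healthy site: the caller must surface an outage
    }
}
```

With a list such as List.of(awsEurope, onPremCanada), traffic stays on the primary while it is healthy and moves to the secondary only when the primary's check fails.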

High-availability storage

Compute alone does not keep a registry running. The data must be available wherever the service is active.

As part of our infrastructure improvement plan, we are adding a dedicated fallback storage cluster and synchronizing extension binaries and metadata across locations. This reduces reliance on any single storage layer and prevents situations where one environment is healthy but lacks the data it needs.

If one storage layer becomes unreachable, the other is ready to step in.
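A read path that tolerates a missing or unreachable storage layer might look like the following minimal sketch. BlobStore, InMemoryStore, and FallbackStore are hypothetical names invented for illustration; the sketch assumes an unreachable store signals failure with IllegalStateException, and it does not describe the Registry's actual storage code.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical storage abstraction used only for this sketch.
interface BlobStore {
    // Returns the stored bytes, or empty if the key is missing.
    // Assumed convention: throws IllegalStateException if the store is unreachable.
    Optional<byte[]> get(String key);
}

// In-memory store that can be switched "offline" to demonstrate the fallback.
final class InMemoryStore implements BlobStore {
    private final Map<String, byte[]> data = new HashMap<>();
    private volatile boolean reachable = true;

    void put(String key, byte[] value) { data.put(key, value); }

    void setReachable(boolean reachable) { this.reachable = reachable; }

    @Override
    public Optional<byte[]> get(String key) {
        if (!reachable) {
            throw new IllegalStateException("store unreachable");
        }
        return Optional.ofNullable(data.get(key));
    }
}

// Reads from the primary store and falls back to the secondary when the primary
// is unreachable or does not (yet) hold the requested object.
final class FallbackStore implements BlobStore {
    private final BlobStore primary;
    private final BlobStore secondary;

    FallbackStore(BlobStore primary, BlobStore secondary) {
        this.primary = primary;
        this.secondary = secondary;
    }

    @Override
    public Optional<byte[]> get(String key) {
        try {
            Optional<byte[]> result = primary.get(key);
            if (result.isPresent()) {
                return result;
            }
        } catch (IllegalStateException unreachable) {
            // primary is down: fall through to the secondary store
        }
        return secondary.get(key);
    }
}
```

Falling back when the primary returns nothing, not only when it fails, covers the case mentioned above where one environment is healthy but has not yet received the data it needs.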

Seeing issues before they become outages

Reducing downtime starts with visibility.

We are modernizing our observability stack across both cloud and on-prem environments, strengthening monitoring, centralized logging, and real-time alerting. This makes it easier to detect slowdowns, rising error rates, or unusual traffic patterns before they impact users.

Earlier detection leads to faster resolution and fewer user-visible incidents.
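One common way to spot rising error rates early is to track the outcome of recent requests in a sliding window and alert when the failure ratio crosses a threshold. The sketch below assumes a count-based window; the class name, window size, and threshold are hypothetical and not taken from the Eclipse Foundation's monitoring setup.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Tracks the outcomes of the most recent requests and flags a rising error rate.
// Window size and threshold are illustrative values only.
final class ErrorRateMonitor {
    private final int windowSize;
    private final double alertThreshold;
    private final Deque<Boolean> outcomes = new ArrayDeque<>(); // true = failed request
    private int failures;

    ErrorRateMonitor(int windowSize, double alertThreshold) {
        if (windowSize <= 0) {
            throw new IllegalArgumentException("windowSize must be positive");
        }
        this.windowSize = windowSize;
        this.alertThreshold = alertThreshold;
    }

    // Records one request outcome, evicting the oldest once the window is full.
    void recordOutcome(boolean failed) {
        outcomes.addLast(failed);
        if (failed) {
            failures++;
        }
        if (outcomes.size() > windowSize && outcomes.removeFirst()) {
            failures--; // the evicted outcome was a failure
        }
    }

    double errorRate() {
        return outcomes.isEmpty() ? 0.0 : (double) failures / outcomes.size();
    }

    // Alerts only once the window is full, so one early failure does not page anyone.
    boolean shouldAlert() {
        return outcomes.size() == windowSize && errorRate() >= alertThreshold;
    }
}
```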

Faster recovery through clearer process

Technology improves reliability. Process makes it consistent.

We are formalizing incident response and recovery procedures for our multi-site architecture. Updated runbooks and rehearsed failover scenarios reduce mean time to recovery and remove uncertainty during high-pressure events.

When something does go wrong, clarity and speed make all the difference.

Why this work matters

The Open VSX Registry now supports a rapidly expanding ecosystem of developer platforms, CI systems, and AI-enabled tools. Growth brings higher expectations for uptime and reliability.

These infrastructure improvements are a long-term investment in keeping the Open VSX Registry stable, secure, and dependable as it scales.

Security builds trust. Operational guardrails support sustainability. Infrastructure upgrades ensure the service remains available when it matters most.

The Open VSX Registry is shared public infrastructure. Keeping it reliable requires continuous investment, thoughtful architecture, and disciplined operations. This work strengthens the registry so developers, publishers, and platform providers can rely on it with confidence, today and as the ecosystem continues to evolve.

It’s a team effort

This work reflects the effort of many people across the Eclipse Foundation and the broader Open VSX community. From the IT teams to Software Development, Security and beyond, including our community of users, developers, testers and integrators, all have contributed to making Open VSX a world‑class, high‑value extension registry that continues to grow through focused stewardship, open collaboration, and a commitment to empowering developers everywhere.

We also appreciate the collaboration of our cloud and infrastructure partners who continue to support the reliability and performance of the Open VSX Registry.

February 18, 2026

Eclipse RCP Book - Fourth Edition

by Lars at February 18, 2026 12:00 AM

I’m happy to announce the release of the fourth edition of the Eclipse RCP book, updated for Eclipse 2025-12.

Eclipse Rich Client Platform (RCP) powers some of the world’s most sophisticated desktop applications. This comprehensive guide takes you from first principles to professional application development, combining crystal-clear explanations with hands-on exercises that build a complete working application.

You’ll learn:

- Building modern UIs with SWT, JFace, and CSS styling

- OSGi modularity, dependency injection, and platform services

- Command-line builds with Maven and Tycho

- Migrating legacy Eclipse 3.x applications

Whether you’re building your first Eclipse application or modernizing existing systems, this book provides the complete roadmap. Each chapter pairs thorough explanations with detailed exercises.

This fourth edition is fully updated for Eclipse 2025-12 and reflects real-world best practices from hundreds of training sessions and production projects.

Get the book on Amazon:

February 16, 2026

What if the add and remove methods in java.util.Collection had fluent counterparts?

by Donald Raab at February 16, 2026 08:21 PM

Eclipse Collections has complements to add/remove that can be chained.

Photo by Rickard Olsson on Unsplash

The truth behind add and remove

The add and remove methods on java.util.Collection return boolean. The boolean return value of add is useful for Set types, but not very useful for List types. The add method returns whether an element was added to the collection, which is always true for a List, but not necessarily for a Set. The remove method returns whether an element was removed from the collection, which behaves the same way for a Set and a List.
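As a quick illustration of those return values, here is a small JDK-only sketch; the class name AddRemoveReturnValues is arbitrary. Note that each call needs its own statement, because a boolean result offers nothing to chain on.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

final class AddRemoveReturnValues {
    public static void main(String[] args) {
        List<String> list = new ArrayList<>();
        Set<String> set = new HashSet<>();

        // add: always true for this List, false for a Set that already holds the element
        System.out.println(list.add("Mary")); // true
        System.out.println(list.add("Mary")); // true, lists accept duplicates
        System.out.println(set.add("Mary"));  // true
        System.out.println(set.add("Mary"));  // false, already present

        // remove: true only if an element was actually removed, for both types
        System.out.println(list.remove("Ted")); // false, "Ted" was never added
        System.out.println(set.remove("Mary")); // true

        // list.add("Ted").add("Sally") would not compile: add returns boolean
    }
}
```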

One of the downsides of add and remove returning boolean is that they can't be chained, which results in the need for multiple statements or for static factory methods like of() on List, Set, and Map.

This is a truth that Java developers have dealt with for 28 years. So while we can’t chain add and remove methods in Java alone, Eclipse Collections provides alternatives named with and without that make chaining possible.

The fluent methods with and without

The with method in the Eclipse Collections MutableCollection interface adds the element passed as a parameter to the underlying collection and returns the same collection. The without method in MutableCollection removes the element passed as a parameter and also returns the same collection. Both methods mutate the underlying collection; they do not return copies of the collection.

The methods with and without are covariant. This means on MutableCollection, they return MutableCollection. On MutableList, they return MutableList. On MutableSet, they return MutableSet. Etc.
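Covariant return types are plain Java, so the idea can be shown without Eclipse Collections at all. FluentCollection, FluentList, and SimpleFluentList below are hypothetical stand-ins invented for this sketch; they are not the real MutableCollection or MutableList types, only a minimal illustration of why narrowing the return type keeps List-specific methods available after chaining.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified interfaces mimicking the idea (not Eclipse Collections types).
interface FluentCollection<T> {
    FluentCollection<T> with(T element);
}

interface FluentList<T> extends FluentCollection<T> {
    @Override
    FluentList<T> with(T element); // covariant override: narrows the return type

    T get(int index);              // a list-only method, still reachable after chaining
}

final class SimpleFluentList<T> implements FluentList<T> {
    private final List<T> delegate = new ArrayList<>();

    @Override
    public SimpleFluentList<T> with(T element) { // narrows the return type once more
        delegate.add(element); // mutates this list in place
        return this;           // returns the same instance, not a copy
    }

    @Override
    public T get(int index) {
        return delegate.get(index);
    }

    int size() {
        return delegate.size();
    }
}
```

Without the covariant override, with would return FluentCollection, and a chain such as list.with("Mary").with("Ted").get(1) would not compile.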

Here’s an example that compares the difference between using add and with on a java.util.List and MutableList.

```java
@Test
public void addListVsWithMutableList()
{
    var expected = Lists.immutable.of("Mary", "Ted", "Sally");

    List<String> jdkList = new ArrayList<>();
    jdkList.add("Mary");
    jdkList.add("Ted");
    jdkList.add("Sally");
    Assertions.assertEquals(expected, jdkList);

    MutableList<String> ecMutableList = Lists.mutable.empty();
    ecMutableList.with("Mary").with("Ted").with("Sally");
    Assertions.assertEquals(expected, ecMutableList);
}
```

Here’s an example that compares the difference between using remove and without on a java.util.List and MutableList.

```java
@Test
public void removeListVsWithoutMutableList()
{
    var expected = Lists.immutable.of("Mary", "Sally");

    List<String> jdkList = new ArrayList<>();
    jdkList.add("Mary");
    jdkList.add("Ted");
    jdkList.add("Sally");
    jdkList.remove("Ted");
    Assertions.assertEquals(expected, jdkList);

    MutableList<String> ecMutableList = Lists.mutable.empty();
    ecMutableList.with("Mary").with("Ted").with("Sally").without("Ted");
    Assertions.assertEquals(expected, ecMutableList);
}
```

There are fluent equivalents to addAll and removeAll in the Eclipse Collections MutableCollection interface, named withAll and withoutAll.

The definitions of with, without, withAll, and withoutAll on MutableCollection are straightforward. You will notice a very subtle difference between addAll and withAll, and removeAll and withoutAll.

```java
// Collection interface
boolean add(E e);
boolean remove(Object o);
boolean addAll(Collection<? extends E> c);
boolean removeAll(Collection<?> c);

// MutableCollection interface
MutableCollection<T> with(T element);
MutableCollection<T> without(T element);
MutableCollection<T> withAll(Iterable<? extends T> elements);
MutableCollection<T> withoutAll(Iterable<? extends T> elements);
```

The methods withAll and withoutAll take Iterable instead of Collection as a parameter, which makes them more useful with additional types. For instance, you can even use them with Stream, by using a little trick as shown in the following example.

```java
@Test
public void addAllListVsWithAllMutableList()
{
    Supplier<Stream<String>> one = () -> Stream.of("a", "b", "c");
    Supplier<Stream<String>> two = () -> Stream.of("d", "e", "f");
    Supplier<Stream<String>> three = () -> Stream.of("g", "h", "i");
    var expected = List.of("a", "b", "c", "d", "e", "f", "g", "h", "i");

    List<String> jdkList = new ArrayList<>();
    jdkList.addAll(one.get().toList());
    jdkList.addAll(two.get().toList());
    jdkList.addAll(three.get().toList());
    Assertions.assertEquals(expected, jdkList);

    MutableList<String> ecMutableList = Lists.mutable.<String>empty()
            .withAll(one.get()::iterator)
            .withAll(two.get()::iterator)
            .withAll(three.get()::iterator);
    Assertions.assertEquals(expected, ecMutableList);
}
```

In the withAll calls, the Stream instances are not copied into a List before being added to the target List. Instead, each Stream is converted into an Iterable by using a method reference to the Stream::iterator method.
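Because Iterable has a single abstract method, iterator(), a method reference such as stream::iterator can stand in for an Iterable. The JDK-only sketch below (class name arbitrary) shows the trick, along with a caveat worth knowing: the resulting Iterable is single-use, since a Stream can only be consumed once. That is also why the test above creates each Stream through a Supplier: the same data is consumed twice, once for the JDK list and once for the MutableList.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Stream;

final class StreamAsIterable {
    public static void main(String[] args) {
        Stream<String> stream = Stream.of("a", "b", "c");

        // Iterable's only abstract method is iterator(), so a bound method
        // reference to stream.iterator() satisfies it without copying elements.
        Iterable<String> iterable = stream::iterator;

        List<String> target = new ArrayList<>();
        for (String s : iterable) {
            target.add(s);
        }
        System.out.println(target); // [a, b, c]

        // A second iteration calls stream.iterator() again, which throws
        // IllegalStateException because the stream has already been consumed.
        try {
            iterable.iterator();
        } catch (IllegalStateException e) {
            System.out.println("stream already consumed");
        }
    }
}
```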

Additional Resources

If you would like to see more examples of with, without, withAll, and withoutAll, there are a couple of blogs I would recommend. I wrote the following blog seven years ago, and it is as applicable today as it was back then.

As a matter of Factory — Part 3 (Method Chaining)

If we go back a couple more years, I wrote a blog that explains the choice of with and without as preferred prepositions over the method name of. Each person has their own preposition preference, but of does not have a natural opposite the way with has without.

I hope you found this short reference for the fluent with, without, withAll, and withoutAll methods in the Eclipse Collections MutableCollection interface helpful. There are more exhaustive and explanatory references in the blogs above if you are curious and want to learn more.

Thanks for reading!

I am the creator of and committer for the Eclipse Collections OSS project, which is managed at the Eclipse Foundation. Eclipse Collections is open for contributions. I am the author of the book, Eclipse Collections Categorically: Level up your programming game.

February 12, 2026

Eclipse Theia 1.68 Release: News and Noteworthy

by Jonas, Maximilian & Philip at February 12, 2026 12:00 AM

We are happy to announce the Eclipse Theia 1.68 release! In total, the release contains 75 merged pull requests. In this article, we will highlight some selected improvements and provide an overview of …

The post Eclipse Theia 1.68 Release: News and Noteworthy appeared first on EclipseSource.

February 11, 2026

Scaling the Open VSX Registry responsibly with rate limiting

February 11, 2026 08:05 PM

The Open VSX Registry has become widely used infrastructure for modern developer tools. That growth reflects strong trust from the ecosystem, and it brings a shared responsibility to keep the Registry reliable, predictable, and equitable for everyone who depends on it.

In a previous post, I shared an update on strengthening supply-chain security in the Open VSX Registry, including the introduction of pre-publish checks for extensions. This post focuses on the operational side of the same goal: ensuring the Registry remains resilient and sustainable as usage continues to grow.

The Open VSX Registry is free to use, but not free to operate

Operating a global extension registry requires sustained investment in:

- Compute and storage to serve and index extensions at scale

- Bandwidth to deliver downloads and metadata worldwide

- Security to protect users, publishers, and the service itself

- Staff to operate, monitor, secure, and support the Registry

These costs scale directly with usage.

AI-driven usage is scaling faster than ever

Demand on the Open VSX Registry is increasing rapidly, and AI-enabled development is accelerating that trend. A single developer can now orchestrate dozens of agents and automated workflows, generating traffic that previously would have required entire teams. In practical terms, that can mean the equivalent load of twenty or more traditional users, with direct impact on compute, bandwidth, storage, security capacity, and operational oversight.

This is not unique to the Open VSX Registry. It is an industry-wide challenge. Stewards of public package registries such as Maven Central, PyPI, crates.io, and Packagist have recently raised the same sustainability concerns in a joint statement on sustainable stewardship. Mike Milinkovich, Executive Director of the Eclipse Foundation, echoed that message in his post on aligning responsibility with usage.

As reliance on shared open infrastructure grows, sustaining it becomes a collective responsibility across the ecosystem.

Open VSX is critical, and often invisible, infrastructure

Many developers and organisations may not realise how often they rely on the Open VSX Registry. It provides the extension infrastructure behind a growing number of modern developer platforms and tools, including Amazon’s Kiro, Cursor, Google Antigravity, Windsurf, VSCodium, IBM’s Project Bob, Trae, Ona (formerly Gitpod), and others.

If you use one of these tools, you use the Open VSX Registry.

The Open VSX Registry remains a neutral, vendor-independent public service, operated in the open and governed by the Eclipse Foundation for the benefit of the entire ecosystem.

For developers, the expectation is simple: Open VSX should remain fast, stable, secure, and dependable as the ecosystem grows.

As more platforms and automated systems rely on the Registry, continuous machine-driven traffic can place sustained load on shared infrastructure. Without clear operational guardrails, that can affect performance and availability for everyone.

A practical step for sustainable and reliable operations

Usage has shifted from primarily human-driven access to continuous automation driven by CI systems, cloud-based tooling, and AI-enabled workflows. That shift requires operational controls that scale predictably.

Rate limiting provides a structured way to manage high-volume automated traffic while preserving the performance developers expect. It also ensures that operational decisions are based on real usage patterns and that expectations for large-scale consumption are clear and transparent.
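Rate limiting can be implemented in several ways; a token bucket is one widely used technique. The sketch below is a generic illustration, not the Open VSX Registry's implementation: TokenBucket is a hypothetical class, and the capacity and refill rate are placeholders.

```java
import java.util.function.LongSupplier;

// Minimal token bucket: tokens refill at a steady rate up to a burst capacity,
// and each request spends one token. Parameters are placeholders only.
final class TokenBucket {
    private final long capacity;
    private final double refillPerSecond;
    private final LongSupplier clock; // nanosecond clock, injectable for tests
    private double tokens;
    private long lastRefill;

    TokenBucket(long capacity, double refillPerSecond, LongSupplier nanoClock) {
        this.capacity = capacity;
        this.refillPerSecond = refillPerSecond;
        this.clock = nanoClock;
        this.tokens = capacity;                 // start full so an initial burst is allowed
        this.lastRefill = nanoClock.getAsLong();
    }

    TokenBucket(long capacity, double refillPerSecond) {
        this(capacity, refillPerSecond, System::nanoTime);
    }

    // Returns true if the request may proceed, false if it should be throttled.
    synchronized boolean tryAcquire() {
        long now = clock.getAsLong();
        double refilled = (now - lastRefill) * refillPerSecond / 1_000_000_000.0;
        tokens = Math.min(capacity, tokens + refilled);
        lastRefill = now;
        if (tokens >= 1.0) {
            tokens -= 1.0;
            return true;
        }
        return false;
    }
}
```

The capacity absorbs short bursts, such as a developer installing several extensions at once, while the refill rate caps sustained throughput, which is where continuous automated traffic is felt.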

Rate limits aren’t entirely new. Like most public infrastructure services, the Open VSX Registry has long had baseline protections in place to prevent sustained high-volume usage from degrading performance for everyone. What’s changing now is that we’re moving from a one-size-fits-all approach to defined tiers that more accurately reflect different usage patterns. This allows us to keep the Registry stable and responsive for developers and open source projects, while providing a clear, supported path for sustained at-scale consumption.

For individual developers and open source projects, day-to-day workflows remain unchanged. Publishing extensions, searching the registry, and installing tools will continue to work as they always have for typical usage.

A measured, transparent rollout

Rate limiting will be introduced incrementally, with an emphasis on platform health and operational stability.

The initial phase focuses on visibility and observation. It includes improved insight into traffic patterns for registered consumers, baseline protections for anonymous high-volume usage, and a monitoring period before any limits are adjusted.
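Observing before enforcing can be sketched as a limiter with a monitor-only mode: over-limit requests are counted but still served, which produces usage data before any limit is actually applied. Everything here is hypothetical, including the Tier and Mode enums, the per-window limits, and the fixed-window counting strategy; the Registry's real tiers and numbers are not described in this post.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical tiers with placeholder per-window limits.
enum Tier {
    ANONYMOUS(100), REGISTERED(1_000);

    final int requestsPerWindow;

    Tier(int requestsPerWindow) { this.requestsPerWindow = requestsPerWindow; }
}

enum Mode { MONITOR, ENFORCE }

// Fixed-window counter per client. In MONITOR mode, over-limit requests are
// only counted, so traffic can be studied before anything is rejected.
final class TieredLimiter {
    private final Mode mode;
    private final Map<String, Integer> counts = new HashMap<>();
    private long currentWindow = -1;
    private int wouldHaveRejected;

    TieredLimiter(Mode mode) { this.mode = mode; }

    // windowIndex is supplied by the caller, e.g. epoch seconds divided by 60.
    synchronized boolean allow(String clientId, Tier tier, long windowIndex) {
        if (windowIndex != currentWindow) { // a new window resets all counters
            counts.clear();
            currentWindow = windowIndex;
        }
        int count = counts.merge(clientId, 1, Integer::sum);
        if (count <= tier.requestsPerWindow) {
            return true;
        }
        wouldHaveRejected++;
        return mode == Mode.MONITOR; // observe only, or actually reject
    }

    synchronized int wouldHaveRejected() { return wouldHaveRejected; }
}
```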

This work is being done in the open so the community can follow what is changing and why. Progress and discussion are tracked publicly in the Open VSX deployment issue:

https://github.com/EclipseFdn/open-vsx.org/issues/5970

What this means for the community

The goal is to keep the Open VSX Registry reliable and fair as it scales, while minimizing impact on normal use.

For most users, nothing should feel different. Developers should see little to no impact, and publishers should not experience disruption to normal publishing workflows. Sustained, high-volume automated consumers may need to coordinate with the Registry to ensure their usage can be supported reliably over time.
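For automated consumers, a well-behaved client backs off when it is throttled instead of retrying immediately. The sketch below assumes, as many HTTP services do, that throttling is signalled with HTTP 429 and an optional Retry-After header in seconds; the post does not specify the Registry's exact behaviour, and RetryPolicy is a hypothetical class.

```java
import java.time.Duration;
import java.util.OptionalLong;

// Client-side retry policy for throttled requests (a sketch; how the Registry
// signals throttling is an assumption here).
final class RetryPolicy {
    private final Duration baseDelay;
    private final Duration maxDelay;

    RetryPolicy(Duration baseDelay, Duration maxDelay) {
        this.baseDelay = baseDelay;
        this.maxDelay = maxDelay;
    }

    // attempt is 0 for the first retry. A server-provided Retry-After value (seconds)
    // takes precedence; otherwise the delay doubles per attempt, capped at maxDelay.
    Duration delayFor(int attempt, OptionalLong retryAfterSeconds) {
        if (retryAfterSeconds.isPresent()) {
            return Duration.ofSeconds(retryAfterSeconds.getAsLong());
        }
        int shift = Math.min(attempt, 30); // keeps the multiplier from overflowing
        Duration delay = baseDelay.multipliedBy(1L << shift);
        return delay.compareTo(maxDelay) > 0 ? maxDelay : delay;
    }
}
```

Production clients usually also add random jitter to the delay so that many throttled clients do not retry in lockstep.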

Organisations that depend on the Open VSX Registry for sustained or commercial-scale usage are encouraged to get in touch. Coordinating early helps us plan capacity, maintain reliability, and support the broader ecosystem. Please contact the Open VSX Registry team at infrastructure@eclipse-foundation.org.

The intent is not restriction, but clarity in support of fairness, stability, and long-term sustainability.

Looking ahead

Automation is reshaping how developer infrastructure is consumed. Responsible rate limiting is one step toward ensuring the Open VSX Registry can continue to serve the ecosystem reliably as those patterns evolve.

We will continue to adapt based on real-world usage and community input, with the goal of keeping the Open VSX Registry a dependable shared resource for the long term.

February 10, 2026

Eclipse JKube 1.19 is now available!

February 10, 2026 02:00 PM

On behalf of the Eclipse JKube team and everyone who has contributed, I'm happy to announce that Eclipse JKube 1.19.0 has been released and is now available from Maven Central 🎉.

Thanks to all of you who have contributed with issue reports, pull requests, feedback, and spreading the word with blogs, videos, comments, and so on. We really appreciate your help, keep it up!

What's new?

Without further ado, let's have a look at the most significant updates:

- Improved Spring Boot health probes configuration

- ECR registry authentication with AWS SDK v2

- IngressClassName support for Ingress resources

- Updated base images from UBI 8 to UBI 9

- Reduced dependencies (Guava removal)

- 🐛 Many other bug-fixes and minor improvements

Improved Spring Boot health probes configuration

This release brings several improvements for the Spring Boot health probes configuration:

- JKube now uses the correct management.endpoint.health.probes.enabled property. The previous property (management.health.probes.enabled) was deprecated in Spring Boot 2.3.2.

- Added support for the server.ssl.enabled and management.server.ssl.enabled properties to enable liveness/readiness probes for Spring Boot Actuator. This allows for easier environment-specific SSL configuration.

ECR registry authentication with AWS SDK v2

JKube now supports Amazon ECR registry authentication using AWS SDK Java v2. This update ensures compatibility with the latest AWS SDK and provides a more robust authentication mechanism when pushing images to Amazon Elastic Container Registry.

IngressClassName support for Ingress resources

The IngressClassName field is now supported in the NetworkingV1IngressGenerator.

This is essential for Kubernetes environments with multiple ingress controllers, allowing you to specify which ingress controller should handle your Ingress resources.

Using this release

If your project is based on Maven, you just need to add the Kubernetes Maven plugin or the OpenShift Maven plugin to your plugin dependencies:

```xml
<plugin>
  <groupId>org.eclipse.jkube</groupId>
  <artifactId>kubernetes-maven-plugin</artifactId>
  <version>1.19.0</version>
</plugin>
```

If your project is based on Gradle, you just need to add the Kubernetes Gradle plugin or the OpenShift Gradle plugin to your plugin dependencies:

```groovy
plugins {
  id 'org.eclipse.jkube.kubernetes' version '1.19.0'
}
```

How can you help?

If you're interested in helping out and are a first-time contributor, check out the "first-timers-only" tag in the issue repository. We've tagged extremely easy issues so that you can get started contributing to Open Source and the Eclipse organization.

If you are a more experienced developer or have already contributed to JKube, check the "help wanted" tag.

We're also excited to read articles and posts mentioning our project and sharing the user experience. Feedback is the only way to improve.

Project Page | GitHub | Issues | Gitter | Mailing list | Stack Overflow

Invisible Blockers for AI Coding - Our Workflow

by Jonas, Maximilian & Philip at February 10, 2026 12:00 AM

Everyone talks about context engineering and about writing the perfect prompt. But where AI coding often gets messy is right after we run that first prompt and the LLM generated a supposed solution. …

The post Invisible Blockers for AI Coding - Our Workflow appeared first on EclipseSource.

February 05, 2026

We’re hiring: improving the services that support a global open source community

February 05, 2026 07:02 PM

The Eclipse Foundation supports a global open source community by providing trusted platforms, services, and governance. As a vendor-neutral organisation, we operate infrastructure that enables collaboration across projects, organisations, and industries.

This infrastructure supports project governance, developer tooling, and day-to-day operations across Eclipse open source projects. While much of it runs quietly in the background, it plays a critical role in the health, security, and sustainability of those projects.

We are expanding the Software Development team with two new roles. Both positions involve contributing to the design, development, and operation of services that are widely used, security-sensitive, and expected to operate reliably at scale.

Software engineer: security and detection

One of the roles is a Software Engineer position with a focus on security and detection engineering, alongside general development and operations.

This role will work on Open VSX Registry, an open source registry for VS Code extensions operated by the Eclipse Foundation. As adoption grows, maintaining the integrity and trustworthiness of the registry requires continuous analysis, detection, and operational safeguards.

In this role, you will:

- Analyse suspicious or malicious extensions and related artefacts

- Develop, test, and maintain YARA rules to detect malicious or policy-violating content

- Design, implement and contribute improvements to backend services, including new features, abuse prevention, rate-limiting, and operational safeguards

This is hands-on work that combines backend development with practical security analysis. The outcome directly improves the reliability, integrity, and operation of services that are part of the developer tooling supply chain.

For more context on this work, see my recent post on strengthening supply-chain security in Open VSX.

To apply:

https://eclipsefoundation.applytojob.com/apply/eXFgacP5SJ/Software-Engineer

Software developer: open source project tooling and services

The second role is a Software Developer position focused on improving the tools and services that support Eclipse open source projects.

This work centres on maintaining and evolving systems that our open source projects and contributors rely on every day. It includes:

- Maintaining and modernising project-facing applications such as projects.eclipse.org, built with Drupal and PHP

- Developing Python tooling to automate internal processes and improve project metrics

- Improving services written in Java or JavaScript that support project governance workflows

As with the Software Engineer role, this position involves contributing to production services. The focus is on incremental improvement, reducing technical debt, and ensuring systems remain maintainable, secure, and reliable as they evolve.

To apply:

https://eclipsefoundation.applytojob.com/apply/mvaSS7T8Ox/Software-Developer

What we are looking for

Across both roles, we are looking for people who:

- Take a pragmatic approach to problem solving

- Are comfortable working in a remote, open source environment

- Value clear documentation and thoughtful communication

- Enjoy understanding how systems work and how to improve them over time

If you are interested in working on open source infrastructure with real users and real impact, we would be happy to hear from you.

February 03, 2026

The Eclipse Foundation and ECSO formalise cooperation through new Memorandum of Understanding

by Shanda Giacomoni at February 03, 2026 02:58 PM

The European Cyber Security Organisation (ECSO) and the Eclipse Foundation have formalised a Memorandum of Understanding (MoU), establishing a framework for close cooperation between the two organisations.

February 02, 2026

WoT Tooling: Code Generation and OpenAPI from Thing Models

February 02, 2026 12:00 AM

Eclipse Ditto’s W3C WoT (Web of Things) integration lets you reference Thing Models in Thing Definitions, generate Thing Descriptions, and create Thing skeletons at runtime. Alongside that, the ditto-wot-tooling project provides build-time and CLI tools to generate Kotlin code and OpenAPI specifications from the same WoT Thing Models.

This post gives an overview of the available tools, their configuration, and some best practices so the Ditto community can use them effectively.

Overview of ditto-wot-tooling

The ditto-wot-tooling repository hosts two main tools:

| Tool | Purpose |
|---|---|
| WoT Kotlin Generator | Maven plugin that downloads a WoT Thing Model (JSON-LD) via HTTP and generates Kotlin data classes and path helpers for type-safe use in your application. |
| WoT to OpenAPI Generator | Converts WoT Thing Models into OpenAPI 3.1.0 specifications that describe Ditto’s HTTP API for Things conforming to that model. Usable as CLI or library. |

Both tools consume Thing Models from a URL (e.g. a deployed model registry). They complement Ditto’s runtime WoT support: Ditto fetches Thing Models (TMs) to build skeletons and Thing Descriptions; the tooling uses the same TMs at build time or in CI to generate client code and API docs.

WoT Kotlin Generator Maven plugin

The WoT Kotlin Generator produces Kotlin code (data classes, builders, path DSL) from a single Thing Model URL. The generated code aligns with Ditto’s API and can be used to build merge commands, RQL filters, and path references in a type-safe way.

Why use generated models?

Using the Kotlin generator gives you a single source of truth: your backend models are derived directly from the WoT Thing Models, which act as the schema for your Things. Ditto supports WoT-based validation of Things and Features against the referenced Thing Model. If you maintain models by hand, they can drift from the WoT schema and become incompatible—leading to validation errors at runtime, failed updates, or subtle bugs. Generated models stay in sync with the Thing Model you point the plugin at, so the payloads you build are valid by construction. That makes development easier, safer, and more predictable: you get compile-time safety and alignment with Ditto’s validation instead of discovering mismatches only when Ditto rejects a request.

Maven setup

Add the common-models dependency (required by the generated code) and the plugin to your pom.xml:

```xml
<dependencies>
  <!-- Support classes (path builders, RQL helpers) used by the generated code -->
  <dependency>
    <groupId>org.eclipse.ditto</groupId>
    <artifactId>wot-kotlin-generator-common-models</artifactId>
    <version>1.0.0</version>
  </dependency>
</dependencies>

<build>
  <plugins>
    <plugin>
      <groupId>org.eclipse.ditto</groupId>
      <artifactId>wot-kotlin-generator-maven-plugin</artifactId>
      <version>1.0.0</version>
      <executions>
        <execution>
          <id>code-generator-my-model</id>
          <!-- Generate the Kotlin sources before compilation -->
          <phase>generate-sources</phase>
          <goals>
            <goal>codegen</goal>
          </goals>
          <configuration>
            <!-- modelBaseUrl and my-model.version are Maven properties -->
            <thingModelUrl>${modelBaseUrl}/my-domain/my-model-${my-model.version}.tm.jsonld</thingModelUrl>
            <packageName>com.example.wot.model.mymodel</packageName>
            <classNamingStrategy>ORIGINAL_THEN_COMPOUND</classNamingStrategy>
          </configuration>
        </execution>
      </executions>
    </plugin>
  </plugins>
</build>
```

Full plugin configuration options

| Parameter | Type | Required | Default | Description |
|---|---|---|---|---|
| thingModelUrl | String | Yes | - | Full HTTP(S) URL of the WoT Thing Model (JSON-LD). The plugin downloads it at build time. |
| packageName | String | No | org.eclipse.ditto.wot.kotlin.generator.model | Target package for generated Kotlin classes. |
| outputDir | String | No | target/generated-sources | Directory where generated sources are written (Maven adds it as a source root). |
| enumGenerationStrategy | String | No | INLINE | How to generate enums: INLINE (nested in the class that uses them) or SEPARATE_CLASS (standalone enum classes). |
| classNamingStrategy | String | No | COMPOUND_ALL | How to name generated classes: COMPOUND_ALL (e.g. RoomAttributes, BatteryProperties) or ORIGINAL_THEN_COMPOUND (use schema title when possible, compound only on conflict). |
| generateSuspendDsl | boolean | No | false | If true, generated DSL builder functions are suspend functions for Kotlin coroutines. |

Use Maven properties for the base URL and model version so you can switch environments and pin versions in one place:

```xml
<properties>
  <modelBaseUrl>https://models.example.com</modelBaseUrl>
  <my-model.version>1.0.0</my-model.version>
</properties>
```

Enum and class naming strategies

Enum generation

- INLINE (default): Enums are nested inside the class that uses them (e.g. Thermostat.ThermostatStatus). This keeps the number of files lower and is convenient for simple enums.
- SEPARATE_CLASS: Enums are generated as standalone classes in separate files. This is better for IDE navigation and for reuse across multiple classes.

Class naming

- COMPOUND_ALL (default): Class names always combine parent and child (e.g. SmartheatingThermostat, RoomAttributes). This guarantees unique names and makes the hierarchy clear.
- ORIGINAL_THEN_COMPOUND: Uses the schema title when there is no conflict (e.g. Thermostat) and falls back to compound names (e.g. SmartheatingThermostat) when needed. This produces shorter, more readable names where possible.
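To make the two naming rules concrete, here is a small Kotlin sketch that re-implements them as described above. It is an illustration only, not the plugin's code: the className function, its parameters, and the way a conflict is detected (a set of class names already taken in the package) are assumptions.

```kotlin
enum class ClassNamingStrategy { COMPOUND_ALL, ORIGINAL_THEN_COMPOUND }

// Upper-case the first character: "room" -> "Room"
fun String.cap(): String = replaceFirstChar { it.uppercaseChar() }

/**
 * Hypothetical naming rule.
 * parent: name of the enclosing element (e.g. "smartheating")
 * title:  schema title of the element (e.g. "thermostat")
 * taken:  class names already generated in the same package
 */
fun className(
    strategy: ClassNamingStrategy,
    parent: String,
    title: String,
    taken: Set<String>,
): String {
    val original = title.cap()
    val compound = parent.cap() + title.cap()
    return when (strategy) {
        // Always prefix with the parent: unique but longer names
        ClassNamingStrategy.COMPOUND_ALL -> compound
        // Prefer the plain title; fall back to the compound name on a clash
        ClassNamingStrategy.ORIGINAL_THEN_COMPOUND ->
            if (original in taken) compound else original
    }
}

fun main() {
    println(className(ClassNamingStrategy.COMPOUND_ALL, "room", "attributes", emptySet()))
    println(className(ClassNamingStrategy.ORIGINAL_THEN_COMPOUND, "smartheating", "thermostat", emptySet()))
}
```

The trade-off is visible in the sketch: COMPOUND_ALL never needs to know what else exists in the package, while ORIGINAL_THEN_COMPOUND needs that context to decide when a short name is safe.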

DSL: regular vs suspend

By default, the plugin generates regular Kotlin DSL functions for building thing/feature/property objects. Set generateSuspendDsl=true to generate suspend DSL functions instead, so you can use them inside coroutines and call suspend code from within the DSL block.

Generated code structure

The plugin generates a package structure aligned with your Thing Model:

- Main thing class – root class for the thing (e.g. FloorLamp, Device).
- attributes/ – interfaces/classes for thing-level attributes (e.g. Location, Room).
- features/ – one subpackage per feature with feature and property types (e.g. lamp/Lamp.kt, LampProperties.kt).
- DSL functions – fluent builders (e.g. floorLamp { ... }, features { lamp { ... } }).
- Path and RQL helpers – provided by the common-models dependency; the generated code uses them for type-safe paths and RQL expressions.

You must add the wot-kotlin-generator-common-models dependency to your project; the generated code extends interfaces and uses path builders from that artifact.
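The fluent builders follow Kotlin's usual type-safe builder pattern (lambdas with receivers). The sketch below hand-writes that pattern for a floor lamp so the shape of floorLamp { features { lamp { ... } } } is clear. All classes here (FloorLamp, Features, Lamp, LampProperties and their builders) are hypothetical stand-ins; the real generated code will differ in names and detail.

```kotlin
// Hypothetical model types standing in for generated classes
data class LampProperties(val on: Boolean, val brightness: Int)
data class Lamp(val properties: LampProperties)
data class Features(val lamp: Lamp?)
data class FloorLamp(val features: Features)

// Prevents accidentally calling an outer builder's functions from an inner block
@DslMarker
annotation class ThingDsl

@ThingDsl
class LampBuilder {
    var on: Boolean = false
    var brightness: Int = 0
    fun build() = Lamp(LampProperties(on, brightness))
}

@ThingDsl
class FeaturesBuilder {
    private var lamp: Lamp? = null
    fun lamp(block: LampBuilder.() -> Unit) {
        lamp = LampBuilder().apply(block).build()
    }
    fun build() = Features(lamp)
}

@ThingDsl
class FloorLampBuilder {
    private var features = Features(lamp = null)
    fun features(block: FeaturesBuilder.() -> Unit) {
        features = FeaturesBuilder().apply(block).build()
    }
    fun build() = FloorLamp(features)
}

// Entry point in the style of the documented floorLamp { ... } builder
fun floorLamp(block: FloorLampBuilder.() -> Unit): FloorLamp =
    FloorLampBuilder().apply(block).build()

fun main() {
    val lamp = floorLamp {
        features {
            lamp {
                on = true
                brightness = 80
            }
        }
    }
    println(lamp)
}
```

With generateSuspendDsl=true one would presumably expect the block parameters to be suspend lambdas (e.g. suspend FloorLampBuilder.() -> Unit) so that suspend calls can be made inside them; check the generated sources for the exact signatures.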

What the generator supports

The plugin follows the WoT Thing Model specification: it handles properties (read/write, various types), actions (with input/output schemas), events, and links (e.g. tm:extends, tm:submodel). Supported data types include primitives (string, number, integer, boolean), object, array, and custom types via $ref. Enums in the schema become Kotlin enums according to enumGenerationStrategy.

Best practices

- Pin model versions in pom.xml (or a BOM) so builds are reproducible and you can upgrade TMs in a controlled way.
- One execution per “logical” model: use a separate <execution> for each Thing Model you need (e.g. device, room, building). Each execution has its own thingModelUrl and packageName.
- Align with runtime definitions: use the same base URL and versioning as the Thing Definition URLs you send to Ditto, so the generated types match what Ditto expects.
- Add the common-models dependency: the generated code depends on wot-kotlin-generator-common-models for path building and Ditto RQL helpers; do not omit it.

Running the plugin from the command line

You can invoke the plugin directly without a full POM execution by passing parameters with -D:

```shell
mvn org.eclipse.ditto:wot-kotlin-generator-maven-plugin:codegen \
  -DthingModelUrl=https://models.example.com/device-1.0.0.tm.jsonld \
  -DpackageName=com.example.wot.model.device \
  -DoutputDir=target/generated-sources \
  -DenumGenerationStrategy=SEPARATE_CLASS \
  -DclassNamingStrategy=ORIGINAL_THEN_COMPOUND
```

Add -DgenerateSuspendDsl=true to generate the suspend DSL. Invoking the plugin this way is useful for one-off generation or scripts.

Path generation and type-safe RQL

The common-models dependency provides a path builder API that works with the generated classes. You get compile-time–safe paths and RQL expressions instead of string concatenation.

Path builder API

- pathBuilder().from(start = SomeClass::property) – start a path from a property (e.g. Thing::features, Device::attributes).
- .add(NextClass::property) – append path segments (e.g. Features::thermostat, Attributes::location).
- .build() – finalize as a path string (e.g. for logging or custom use).
- .buildSearchProperty() – create a search property object for RQL comparisons (see below).
- .buildJsonPointer() – build a Ditto JSON Pointer (e.g. for MergeThing, DeleteAttribute). Useful when the generated model exposes a startPath (e.g. Location::startPath) for a nested type.
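The key idea behind a path builder like this is that each segment is a Kotlin property reference, so the compiler checks that every segment actually belongs to the type reached by the previous one. Here is a minimal, self-contained sketch of that idea; Path, pathFrom, and the Device/Attributes/Location classes are hypothetical and are not the common-models API.

```kotlin
import kotlin.reflect.KProperty1

// Hypothetical model classes standing in for generated types
data class Location(val mountedOn: String?)
data class Attributes(val type: String, val location: Location?)
data class Device(val attributes: Attributes)

// T is the type reached so far; add() only accepts properties declared on T
class Path<T>(private val segments: List<String>) {
    fun <R : Any> add(prop: KProperty1<T, R?>): Path<R> = Path(segments + prop.name)

    // Join the property names into a slash-separated Ditto-style path
    fun build(): String = segments.joinToString("/")
}

fun <T, R : Any> pathFrom(prop: KProperty1<T, R?>): Path<R> = Path(listOf(prop.name))

fun main() {
    val path = pathFrom(Device::attributes)
        .add(Attributes::location)
        .add(Location::mountedOn)
        .build()
    println(path)
}
```

Because Path<Attributes>.add only accepts a KProperty1<Attributes, ...>, a mistake such as pathFrom(Device::attributes).add(Location::mountedOn) fails to compile instead of producing a wrong path string at runtime.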

RQL combinators

Use these from DittoRql.Companion to combine conditions:

- and(condition1, condition2, ...) – all conditions must hold.
- or(condition1, condition2, ...) – at least one condition must hold.
- not(condition) – negate a condition.

Each condition is often a search property expression (see below). Call .toString() on the result to get the RQL string for Ditto’s search API or conditional request headers.
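It helps to know what the resulting RQL string looks like. The following sketch builds Ditto-style logical expressions from plain strings; the and/or/not functions here are hypothetical stand-ins that only mirror the shape of the combinators, and the leaf conditions are written by hand.

```kotlin
// Shared helper: op(cond1,cond2,...) with no spaces, as in Ditto RQL
fun logical(op: String, conditions: Array<out String>): String {
    require(conditions.isNotEmpty()) { "$op() needs at least one condition" }
    return conditions.joinToString(separator = ",", prefix = "$op(", postfix = ")")
}

fun and(vararg conditions: String): String = logical("and", conditions)
fun or(vararg conditions: String): String = logical("or", conditions)
fun not(condition: String): String = "not($condition)"

fun main() {
    // Same shape as the conditional-merge example further down
    val rql = and(
        "eq(attributes/type,\"THERMOSTAT\")",
        or(
            not("exists(attributes/location/mountedOn)"),
            "le(attributes/location/mountedOn,\"2026-02-20T08:00:00Z\")",
        ),
    )
    println(rql)
}
```

Nesting the function calls mirrors the nesting of the RQL expression, which is why the combinators compose naturally.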

Search property methods

After .buildSearchProperty() you can chain one of:

| Method | Meaning | Example |
|---|---|---|
| exists() | property exists | .exists() |
| eq(value) | equal to | .eq("THERMOSTAT") |
| ne(value) | not equal to | .ne(0) |
| gt(value) | greater than | .gt(20.0) |
| ge(value) | greater than or equal to | .ge(timestamp) |
| lt(value) | less than | .lt(timestamp) |
| le(value) | less than or equal to | .le("2026-02-20T08:00:00Z") |
| like(pattern) | wildcard match (? matches one character, * matches any sequence) | .like("room-*") |
| ilike(pattern) | case-insensitive like | .ilike("*sensor*") |
| in(values) | value is in the given collection | .in(listOf("A", "B")) |
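Each comparison renders as op(path,value), with string values in double quotes and numbers or booleans written as-is. The sketch below shows that formatting with a hypothetical SearchProperty class; it is not the common-models implementation, and for simplicity it rejects strings that contain a double quote instead of escaping them.

```kotlin
// Render a Kotlin value as an RQL literal (simplified: no escaping)
fun rqlValue(value: Any): String = when (value) {
    is String -> {
        require('"' !in value) { "this sketch does not escape double quotes" }
        "\"$value\""
    }
    is Number, is Boolean -> value.toString()
    else -> throw IllegalArgumentException("unsupported RQL value: $value")
}

// Hypothetical stand-in for the object returned by buildSearchProperty()
class SearchProperty(private val path: String) {
    fun exists() = "exists($path)"
    fun eq(v: Any) = op("eq", v)
    fun ne(v: Any) = op("ne", v)
    fun gt(v: Any) = op("gt", v)
    fun ge(v: Any) = op("ge", v)
    fun lt(v: Any) = op("lt", v)
    fun le(v: Any) = op("le", v)
    fun like(pattern: String) = op("like", pattern)
    fun ilike(pattern: String) = op("ilike", pattern)

    // "in" is a Kotlin keyword, so it needs backticks to be used as a name
    fun `in`(values: Collection<Any>): String {
        require(values.isNotEmpty()) { "in() needs at least one value" }
        return "in($path," + values.joinToString(",") { rqlValue(it) } + ")"
    }

    private fun op(name: String, v: Any) = "$name($path,${rqlValue(v)})"
}

fun main() {
    println(SearchProperty("attributes/type").eq("THERMOSTAT"))
    println(SearchProperty("features/thermostat/properties/temperature").gt(20.0))
    println(SearchProperty("attributes/type").`in`(listOf("A", "B")))
}
```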

Example: conditional merge (RQL for Ditto headers)

Typical use is building a condition for Ditto’s conditional request header (e.g. for merge or delete).

Below, we require that the thing has a given attribute type and either no mountedOn or mountedOn less than or equal to a timestamp:

```kotlin
import com.example.wot.model.path.DittoRql.Companion.and
import com.example.wot.model.path.DittoRql.Companion.or
import com.example.wot.model.path.DittoRql.Companion.not
import com.example.wot.model.path.DittoRql.Companion.pathBuilder

// Device, Attributes and Location are generated from your Thing Model;
// thingId, thing, eventDate and dittoHeaders come from your application code.

// Condition: type eq "THERMOSTAT" AND
// (NOT exists(location/mountedOn) OR location/mountedOn <= eventDate)
val updateCondition = and(
    pathBuilder().from(Device::attributes)
        .add(Attributes::type)
        .buildSearchProperty()
        .eq("THERMOSTAT"),
    or(
        not(
            pathBuilder().from(Device::attributes)
                .add(Attributes::location)
                .add(Location::mountedOn)
                .buildSearchProperty()
                .exists()
        ),
        pathBuilder().from(Device::attributes)
            .add(Attributes::location)
            .add(Location::mountedOn)
            .buildSearchProperty()
            .le(eventDate)
    )
).toString()

// Use the RQL string as the condition header of the merge command
val mergeCmd = MergeThing.withThing(
    thingId,
    thing,
    null,
    null,
    dittoHeaders.toBuilder().condition(updateCondition).build()
)
```
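If you talk to Ditto over plain HTTP instead of the Java client, the same condition string can travel as a request header on a merge (PATCH) request. The sketch below only builds the request with the JDK's java.net.http API and does not send it. The "condition" header and the application/merge-patch+json content type reflect Ditto's HTTP API as we understand it; the base URL, thing ID, and payload are placeholders, and authentication is omitted.

```kotlin
import java.net.URI
import java.net.http.HttpRequest

// Builds (but does not send) a conditional merge request for one thing.
// dittoThingsUrl is assumed to look like https://ditto.example.com/api/2/things
fun conditionalMergeRequest(
    dittoThingsUrl: String,
    thingId: String,
    patchJson: String,
    condition: String,
): HttpRequest =
    HttpRequest.newBuilder()
        .uri(URI.create("${dittoThingsUrl.trimEnd('/')}/$thingId"))
        .header("Content-Type", "application/merge-patch+json")
        .header("condition", condition) // RQL string, e.g. from the builder above
        .method("PATCH", HttpRequest.BodyPublishers.ofString(patchJson))
        .build()

fun main() {
    val request = conditionalMergeRequest(
        "https://ditto.example.com/api/2/things",
        "org.example:device-1",
        """{"attributes":{"type":"THERMOSTAT"}}""",
        "eq(attributes/type,\"THERMOSTAT\")",
    )
    println("${request.method()} ${request.uri()}")
}
```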

Example: JSON pointer for DeleteAttribute

For commands that take a JSON pointer (e.g. delete a nested attribute), use the generated startPath and buildJsonPointer():

```kotlin
val deleteLocationAttribute = DeleteAttribute.of(
    thingId,
    pathBuilder().from(Location::startPath).buildJsonPointer(),
    dittoHeaders
)
```
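Ditto's JsonPointer type takes care of the details, but it helps to know the underlying string format, which is defined by RFC 6901: each segment is prefixed with "/", and inside a segment "~" is written as "~0" and "/" as "~1". A minimal sketch (the jsonPointer function is illustrative, not part of Ditto):

```kotlin
// Build an RFC 6901 JSON Pointer string from raw path segments.
// Escape "~" first so the "~1" produced for "/" is not re-escaped.
fun jsonPointer(vararg segments: String): String =
    segments.joinToString(separator = "") { segment ->
        "/" + segment.replace("~", "~0").replace("/", "~1")
    }

fun main() {
    println(jsonPointer("attributes", "location"))
    println(jsonPointer("a/b", "m~n"))
}
```

An empty segment list yields the empty string, which RFC 6901 defines as a pointer to the whole document.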

Example: RQL for search

The same RQL string can be passed to Ditto’s search API (e.g. GET /search/things?filter=...):

```kotlin
val filter = pathBuilder().from(Device::attributes)
    .add(Attributes::location)
    .buildSearchProperty()
    .exists()

dittoClient.searchThings(filter.toString(), ...)
```
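When calling the search endpoint over plain HTTP, the RQL string has to be URL-encoded because it contains characters such as parentheses, slashes, and quotes. A small sketch using the JDK's URLEncoder; the searchUrl helper and the base URL https://ditto.example.com/api/2 are placeholders.

```kotlin
import java.net.URLEncoder
import java.nio.charset.StandardCharsets

// Build a GET URL for Ditto's search endpoint with an encoded RQL filter
fun searchUrl(dittoApiBase: String, rqlFilter: String): String {
    val encoded = URLEncoder.encode(rqlFilter, StandardCharsets.UTF_8)
    return "${dittoApiBase.trimEnd('/')}/search/things?filter=$encoded"
}

fun main() {
    println(searchUrl("https://ditto.example.com/api/2", "exists(attributes/location)"))
}
```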

The exact property names and types (Device, Attributes, Location, etc.) come from your Thing Model; the generator produces the matching classes and path helpers so that paths and RQL stay in sync with the model.

WoT to OpenAPI Generator

The WoT to OpenAPI Generator turns a WoT Thing Model into an OpenAPI 3.1.0 YAML (or JSON) that describes Ditto’s HTTP API for Things that follow that model: thing and attribute paths, feature properties, and actions (e.g. inbox messages).

Benefits for frontends and API consumers

The generated OpenAPI spec is a standard, tool-friendly contract. Frontend teams can feed it into code generators (e.g. OpenAPI Generator, Orval, or the OpenAPI TypeScript/JavaScript generators) to generate TypeScript or JavaScript models, typed HTTP client methods, and request/response types for thing, attribute, feature, and action endpoints. That keeps the UI in sync with the backend: API changes are reflected in the spec, and regenerating client code updates types and calls in one step. You get autocomplete, fewer manual typos, and consistent request shapes. The same spec can drive API documentation (e.g. Swagger UI or Redoc), integration tests, or other clients (mobile, scripts). One Thing Model (TM) thus drives both backend Kotlin models and frontend API usage from a single source of truth.

Usage

The generator is available as a CLI (run with java -jar) or as a library. You can get the JAR from Maven Central or build from source.

Command-line:

```shell
java -jar wot-to-openapi-generator-1.0.0.jar <model-base-url> <model-name> <model-version> [ditto-base-url]
```

| Argument | Description |
|---|---|
| model-base-url | Base URL where the TM is served (e.g. https://models.example.com). |
| model-name | Model name (e.g. dimmable-colored-lamp). The generator will load {model-base-url}/{model-name}-{model-version}.tm.jsonld. |
| model-version | Version (e.g. 1.0.0). |
| ditto-base-url | (Optional) Base URL of the Ditto API (e.g. https://ditto.example.com/api/2/things). Used in the generated servers section. |
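The Thing Model URL is derived from the first three arguments following the pattern {model-base-url}/{model-name}-{model-version}.tm.jsonld. The sketch below reproduces that pattern, which is handy for checking in CI that a model is actually reachable before running the generator. Whether the generator itself normalizes a trailing slash on the base URL (as in the example below) is not stated, so this hypothetical helper trims one explicitly.

```kotlin
// Reproduces the documented pattern {base}/{name}-{version}.tm.jsonld
fun thingModelUrl(baseUrl: String, modelName: String, modelVersion: String): String {
    require(modelName.isNotBlank()) { "model name must not be blank" }
    require(modelVersion.isNotBlank()) { "model version must not be blank" }
    // Avoid ".../models//name-..." when the base URL ends with a slash
    val base = baseUrl.trimEnd('/')
    return "$base/$modelName-$modelVersion.tm.jsonld"
}

fun main() {
    println(
        thingModelUrl(
            "https://eclipse-ditto.github.io/ditto-examples/wot/models/",
            "dimmable-colored-lamp",
            "1.0.0",
        )
    )
}
```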

Example:

```shell
java -jar wot-to-openapi-generator-1.0.0.jar \
  https://eclipse-ditto.github.io/ditto-examples/wot/models/ \
  dimmable-colored-lamp \
  1.0.0 \
  https://ditto.example.com/api/2/things
```

Generated specs are written under a generated/ directory (path may vary by version). The output includes thing-level and attribute-level endpoints, feature properties, and action endpoints with request/response schemas derived from the TM.

Best practices

- Run in CI: generate OpenAPI from your main Thing Models in your pipeline and publish the artifacts (e.g. to a docs site or S3) so API consumers always see up-to-date specs.

- Use the same model base URL and versions as in your Kotlin generator and Ditto Thing definitions, so docs and code stay in sync.

- Set ditto-base-url when you want the generated servers section to point at your Ditto instance; otherwise the generator may use a default.

Summary

- ditto-wot-tooling provides the WoT Kotlin Generator (Maven plugin) and the WoT to OpenAPI Generator (CLI/library). Both take a WoT Thing Model URL and produce artifacts you can use at build time or in CI.

- WoT Kotlin Generator: configure thingModelUrl, packageName, outputDir, enumGenerationStrategy (INLINE/SEPARATE_CLASS), classNamingStrategy (COMPOUND_ALL/ORIGINAL_THEN_COMPOUND), and optionally generateSuspendDsl; pin model versions; add one execution per model; and depend on wot-kotlin-generator-common-models. The generated code plus common-models give you type-safe path building (e.g. pathBuilder().from().add().buildSearchProperty()), RQL combinators (and, or, not), and comparison methods (exists, eq, gt, lt, like, in, etc.) for Ditto search and conditional requests.
- WoT to OpenAPI Generator: run it with the model base URL, model name, and version (and optionally the Ditto base URL); integrate it into CI to keep API docs aligned with your Thing Models.

For more on WoT in Ditto (definitions, skeleton generation, Thing Descriptions), see the WoT integration documentation and the WoT integration blog post. For the tools themselves, go to eclipse-ditto/ditto-wot-tooling.

Feedback?

Please get in touch if you have feedback or questions about the WoT tooling.

–

The Eclipse Ditto team

January 28, 2026

Strengthening supply-chain security in Open VSX

January 28, 2026 11:30 AM

The Open VSX Registry is core infrastructure in the developer supply chain, delivering extensions developers download, install, and rely on every day. As the ecosystem grows, maintaining that trust matters more than ever.

A quick note on terminology: Eclipse Open VSX is an open source project, and the Open VSX Registry is the hosted instance of that project, operated by the Eclipse Foundation. This post focuses primarily on security improvements being rolled out in the Open VSX Registry, while much of the underlying work is happening in the Open VSX project.

Up to now, the Open VSX Registry has relied primarily on post-publication response and investigation. When a bad extension is reported, we investigate and remove it. While this approach remains relevant and necessary, it does not scale as publication volume increases and threat models evolve.

To address this, we are taking a more proactive approach by adding security checks before extensions are published, rather than relying only on reports after the fact.

Why pre-publish security checks matter

Developer tooling ecosystems, including package registries and extension marketplaces, are a popular target, and we see the same types of issues repeatedly:

- Namespace impersonation designed to mislead users

- Secrets or credentials accidentally committed and published

- Malicious or misleading extensions

- Supply-chain attacks that spread quietly over time

Relying only on after-the-fact detection leaves a growing window of exposure. Pre-publish checks help narrow that window by catching the most obvious issues earlier. Similar pre-publication and monitoring approaches are increasingly standard across large extension marketplaces as developer tooling ecosystems mature.

How we’re approaching this work

To move faster and add specialized expertise, we are working alongside security consultants from Yeeth Security. These experts are helping us design and implement a new verification framework, while keeping overall direction, decision-making, and long-term stewardship firmly within the Open VSX project and the Eclipse Foundation as operators of the Open VSX Registry.

Most of this work is happening in the open. We are keeping a small set of security-sensitive details private to reduce the risk of abuse or circumvention.

What we’re building

Together, we’re introducing a new, extensible verification framework. Over time, it will enable Open VSX Registry to:

- Detect clear cases of extension name or namespace impersonation

- Flag accidentally published credentials or secrets

- Scan for known malicious patterns

- Quarantine suspicious uploads for review instead of publishing them immediately, with clear feedback provided to the publisher

If you want to follow along, the work is tracked here:

https://github.com/eclipse/openvsx/issues/1331

This framework is designed to grow with the ecosystem, so we can add new checks as threat models evolve.

A measured rollout

We’re approaching this effort with a focus on ecosystem health. The goal is to raise the security floor, help publishers catch issues early, and keep the experience predictable and fair for good-faith publishers. Our current plan is to:

- Begin monitoring newly published extensions in February, without blocking publication

- Use this monitoring period to tune checks, reduce false positives, and improve feedback

- Move toward enforcement in March, once we’re confident the system behaves predictably and fairly
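To illustrate what "monitor first, enforce later" means for a single check, here is a hypothetical Kotlin sketch of a secret-detection step with two modes. It is not Open VSX's implementation (some of whose details are intentionally kept private). The two patterns match well-known credential formats (AWS access key IDs and classic GitHub personal access tokens), and all names are made up for the example.

```kotlin
enum class Mode { MONITOR, ENFORCE }
enum class Outcome { PUBLISH, PUBLISH_WITH_WARNING, QUARANTINE }

// Two widely known credential formats; a real scanner would use many more
val secretPatterns = mapOf(
    "AWS access key ID" to Regex("""\bAKIA[0-9A-Z]{16}\b"""),
    "GitHub personal access token" to Regex("""\bghp_[A-Za-z0-9]{36}\b"""),
)

// Scan each file of an upload and report "file: possible <kind>" findings
fun findSecrets(fileContents: Map<String, String>): List<String> =
    fileContents.flatMap { (file, text) ->
        secretPatterns.filterValues { it.containsMatchIn(text) }
            .keys
            .map { kind -> "$file: possible $kind" }
    }

// Same findings, different consequences depending on the rollout phase
fun decide(mode: Mode, findings: List<String>): Outcome = when {
    findings.isEmpty() -> Outcome.PUBLISH
    mode == Mode.MONITOR -> Outcome.PUBLISH_WITH_WARNING // record, don't block
    else -> Outcome.QUARANTINE // hold for human review
}

fun main() {
    val upload = mapOf("src/config.ts" to "const key = 'AKIAABCDEFGHIJKLMNOP';")
    val findings = findSecrets(upload)
    println(findings)
    println(decide(Mode.MONITOR, findings))
    println(decide(Mode.ENFORCE, findings))
}
```

Separating detection from the decision is what makes a monitoring period useful: the findings can be reviewed for false positives before the same checks are allowed to block publication.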

This staged rollout gives us room to get it right before it impacts publication flows.

What publishers and users should expect

We want to thank the thousands of extension publishers who already act responsibly and help make the Open VSX Registry a trusted resource for the broader developer community. Publishers may start seeing new messages when potential issues are detected. In most cases, these are meant to be helpful nudges, not roadblocks. The goal is to:

- Catch accidental mistakes early

- Make expectations clearer

- Reduce the likelihood that risky content reaches users

Human review will remain part of the process, especially for edge cases. We will also continue to provide clear remediation guidance in our wikis and project documentation.

For users, the outcome is straightforward. Pre-publish checks reduce the likelihood that obviously malicious or unsafe extensions make it into the ecosystem, which increases confidence in the Open VSX Registry as shared infrastructure.

Security is ongoing work

Security isn’t a one-and-done project. It evolves alongside the ecosystem it protects. We see this work as ongoing, and we’ll continue to share what we’re doing, why we’re doing it, and what we learn along the way.

Looking ahead

Strengthening supply-chain security is a shared responsibility. As the Open VSX Registry continues to grow, investing in proactive safeguards is essential to protecting both publishers and users.

These changes are an important step forward, and part of a longer journey. We’ll keep iterating, learning from real-world use, and adapting as the ecosystem evolves. Community feedback plays a critical role in that process, and we encourage publishers and users to share their experiences as this work rolls out.

We would also like to thank Alpha-Omega for supporting this work, and for their broader support of the Eclipse Foundation’s security initiatives.

Our goal is simple: to keep the Open VSX Registry a resource developers can depend on with confidence.

Growing with the ecosystem

The security work outlined above is part of a broader effort to scale the Open VSX Registry responsibly as adoption continues to grow. That includes investing not just in tooling and processes, but in the people doing the work.

We’re actively expanding the Open VSX team, including roles focused on security, platform engineering, and ecosystem stewardship. If you’re interested in helping build and protect critical open source infrastructure, you can find our current openings on the Eclipse Foundation careers page!

January 27, 2026

Invisible Blockers for AI Coding: Why Developers Feel Useless

by Jonas, Maximilian & Philip at January 27, 2026 12:00 AM

During a recent workshop, a developer pulled one of us aside during a break. “I used to love my job,” he said. “Now I feel tired and useless.” He wasn’t burned out from overwork. He didn’t have bad …

The post Invisible Blockers for AI Coding: Why Developers Feel Useless appeared first on EclipseSource.

January 26, 2026

The OpenHW Foundation unveils the first industry-ready RISC-V ecosystem to advance European digital sovereignty

by Natalia Loungou at January 26, 2026 12:00 PM

BRUSSELS - January 26, 2026 - The OpenHW Foundation, a global leader in developing RISC-V core IP, today announced the launch of the Unified RISC-V IP Access Platform (UAP) as part of the TRISTAN project. The UAP represents Europe’s first comprehensive collection of industry-ready RISC-V components. As interest in digital sovereignty continues to grow, particularly within the European Union, the UAP makes it significantly easier for technology organisations to innovate based on an open, sovereign foundation.

“The Unified RISC-V IP Access Platform is critical to supporting technological sovereignty in Europe, and the OpenHW Foundation is committed to developing it as a sustainable, interoperable, and community-driven resource for the broader RISC-V ecosystem,” said Florian Wohlrab, Head of OpenHW Foundation. “Open source collaboration is essential to maintaining a competitive playing field, and by working together, we can go further, faster.”

RISC-V is an open standard instruction set architecture used to develop custom processors for a wide range of applications, including embedded systems and consumer devices. The European Union considers RISC-V critical to achieving technological sovereignty and driving greater competition in the global semiconductor market, valued at more than USD 700 billion. While the EU currently accounts for roughly 10% of this market, the 2023 European Chips Act aims to double that share to 20% by 2030.

The UAP lowers key barriers to entry for EU-based organisations by providing a single, unified source of verified, industry-ready RISC-V IP. It consolidates both hardware and software components and provides clear visibility into each item’s maturity, usability, licensing, and integration workflow. This marks the first time such a comprehensive collection of verified EU RISC-V artifacts has been assembled, much of it fully open source, representing an important step toward European digital sovereignty. The platform also ensures that IP produced by multiple EU research projects is captured, maintained, and made accessible for driving sustainable, long-term collaboration.

To support its digital sovereignty goals, the European Union has invested heavily in cutting-edge RISC-V research and development through the Chips Joint Undertaking (CHIPS JU), which funds projects such as TRISTAN, of which the OpenHW Foundation is a member.

Launched in 2023, TRISTAN aims to industrialise RISC-V cores by moving them from research environments into real-world applications and creating a sustainable open source ecosystem to drive competitiveness and enable more agile innovation. The TRISTAN consortium includes 46 partners spanning large enterprises, SMEs, research organisations, universities, and industry associations connected to RISC-V. Together, they combine expertise and resources from across Europe and beyond to drive innovation and collaboration.

Originating within the TRISTAN project, the UAP brings together RISC-V IP under various licenses from TRISTAN and other Chips JU projects, providing end users with a centralized place to find verified, industry-ready RISC-V components.

The UAP acts as a unified access page, linking to repositories hosted on the OpenHW Foundation GitHub, automatically mirrored to a European-hosted GitLab instance and to other public forges where appropriate, or maintained as private assets. It provides documentation, status information, and an evolving structure designed to better support integration across toolchains, accelerators, and infrastructure components.

Oversight of the UAP is provided by the Virtual Repository Task Group, which includes representatives from TRISTAN and ISOLDE. Additional Chips JU and RISC-V-related EU projects, including Rebecca, RIGOLETTO, and Scale4Edge, are also joining the initiative. As more EU projects open source their IP, they will be added as maintainers so that each project can curate its own catalogue and ensure continuity beyond the end of TRISTAN.

“The Unified RISC-V IP Access Platform is one of the most important initiatives to come from the TRISTAN project, ensuring that the contributions from consortium partners continue to have an impact on the European stage long past the end of our funding,” said Rob Wullems, NXP Semiconductors GmbH, TRISTAN Project Lead. “Critically, it enables us to build and nurture a community around European RISC-V that will drive ongoing innovation and collaboration that supports European technological sovereignty.”

About The OpenHW Foundation

OpenHW Foundation, an industry collaboration with the Eclipse Foundation, is a global non-profit organisation dedicated to developing, verifying, and delivering high-quality, open source RISC-V processor cores and related IP for commercial and industrial applications.

With its extensive network of members and partners, the OpenHW Foundation is driving the advancement of open source RISC-V processor technology across cloud, mobile, IoT, AI, automotive, HPC, and other domains. Through its CORE-V Task Group, the organisation ensures industry-aligned, high-quality development, supporting cutting-edge SoC production worldwide.

OpenHW Foundation is supported by leading innovators such as Thales, CEA List, Siemens, Red Hat, ETH Zurich, Beijing Institute of Open Source Chip, and many more.

About TRISTAN

The TRISTAN project (no. 101095947) is supported by the Chips Joint Undertaking (CHIPS-JU) and its members Austria, Belgium, Bulgaria, Croatia, Cyprus, Czechia, Germany, Denmark, Estonia, Greece, Spain, Finland, France, Hungary, Ireland, Iceland, Italy, Lithuania, Luxembourg, Latvia, Malta, Netherlands, Norway, Poland, Portugal, Romania, Sweden, Slovenia, Slovakia, Turkey.

Learn more at tristan-project.eu

About the Eclipse Foundation

The Eclipse Foundation provides a global community of individuals and organisations with a vendor-neutral, business-friendly environment for open source collaboration and innovation. We host Adoptium, the Eclipse IDE, Jakarta EE, Open VSX, Software Defined Vehicle, and more than 400 high-impact open source projects. Headquartered in Brussels, Belgium, we are an international non-profit association supported by over 300 members. Our events, including Open Community Experience (OCX), bring together developers, industry leaders, and researchers from around the world. To learn more, follow us on X and LinkedIn, or visit eclipse.org.

Media contacts:

Schwartz Public Relations (Germany)

Julia Rauch/Marita Bäumer

Sendlinger Straße 42A

80331 Munich

EclipseFoundation@schwartzpr.de

+49 (89) 211 871 -70/ -62

514 Media Ltd (France, Italy, Spain)

Benoit Simoneau

M: +44 (0) 7891 920 370

Nichols Communications (Global Press Contact)

Jay Nichols

+1 408-772-1551

January 22, 2026

European Initiative for Data Sovereignty Released a Trust Framework

by Ben Linders at January 22, 2026 11:47 AM

The Danube release of the Gaia-X trust framework provides mechanisms for the automation of compliance and supports interoperability across sectors and geographies to ensure trusted data transactions and service interactions. The Gaia-X Summit 2025 hosted facilitated discussions on AI and data sovereignty, and presented data space solutions that support innovation across Europe and beyond.

MCP and Context Overload: Why More Tools Make Your AI Agent Worse

by Jonas, Maximilian & Philip at January 22, 2026 12:00 AM

The Model Context Protocol (MCP) is one of the most successful innovations in AI tooling. It lets agents connect to external systems — GitHub, databases, browsers, IDEs — through a standardized …

The post MCP and Context Overload: Why More Tools Make Your AI Agent Worse appeared first on EclipseSource.

January 21, 2026

This is not the Vibe Coding Book you are Looking for

by Donald Raab at January 21, 2026 06:12 AM

If you love programming, the book you are looking for may be in here.

Photo by Christian Panta on Unsplash

The end of programming as I knew it

In February 2000, I switched gears from programming in Smalltalk to programming in Java. During my first five years of programming in Java, I suffered repetitive eye strain syndrome reading and writing duplicate for loops. This was the end of programming for me. Java was an absolute waste of my time, productivity, and ultimately, my creativity. The Java language was so pedestrian and duplicative in 2004 that I refused to accept it and decided to do something productive about it. I started building a Java collections library, which is known today as the open source Eclipse Collections library.

I could not have predicted this future for myself, but this is the future that happened to and for me. I had learned something vitally important while programming in Smalltalk professionally that I was unwilling to abandon: EVERY modern programming language should have support for concise lambda expressions and feature-rich collections. If either of these features is missing in a programming language, then the language is not worth programming in.

Note: I find it sadly ironic that many in the curly-brace-language crowd, who said for years that Smalltalk syntax was just too weird to learn, now blindly embrace vibe coding in English. The irony? Smalltalk reads like English. Statements even end in a period instead of a semicolon, like a sentence. Smalltalk is still the best object-oriented programming language available, and most developers will never understand why. Read the following blog with a nice cup of soothing tea, and wonder what secrets this long-dismissed language might be hiding from you.

A little Smalltalk for the soul

The beginning of the end of the beginning

Java was the beginning of the end of programming for me. It was also a new beginning. I have renewed my love of programming with Java. But how? By programming in Java, and teaching programming in Java. I like to joke that I program in Smalltalk in every language I program in. Well, maybe it’s not really a joke. Eclipse Collections was the beginning of Smalltalk-inspired collection productivity in Java. It was also the end of the pedestrian programming language I suffered through in my early years of Java programming. Java was reborn as something new for me, and for all those that I worked with. But there was something missing in Java. I knew what it was, but I had to do something I wouldn’t have thought was possible. I’d have to help the Java language evolve.

I wrote the following story of my ten-year quest to get concise lambda expression support in Java. TL;DR: the story has a happy ending, with Java finally getting support for concise lambda expressions in Java 8.

My ten year quest for concise lambda expressions in Java

By the time I wrote this blog, I knew I wanted to write a book about the Eclipse Collections library, but I hadn’t quite figured out how to approach it. I didn’t want to write a book about lambdas, data structures, and algorithms in Java that I wouldn’t want to read myself. This seemed like an impossible task for a long time. That is, until I figured out how to approach the problem using information chunking.

I love programming, and open source

Programming brings me joy. Teaching others to program brings me even more joy. It has saddened me for twenty-five years to see folks abandon their love of programming because programming just became too hard and painful. Programming does not have to be this way. I wanted to write a book that would bring back the joy of programming I experienced as a child. I wrote a blog about the joy of programming a few years ago.

I see programming through the eyes of a child who learned BASIC in the early 1980s. The same child grew up to love the creative power that Smalltalk showed him in the 1990s.

I have spent twenty-five years showing Java developers what is possible, and I will continue to do so for as long as there are developers out there willing to learn. I solved many difficult problems in Java while working at Goldman Sachs. In January 2012, we open sourced GS Collections on the Goldman Sachs GitHub account. Open sourcing GS Collections made it possible for me and others to show the entire world of Java developers what we were all missing for so many years. While it made it possible, it didn’t make it easy. There are millions of Java developers, and there is a lot of inertia to overcome before they all take a look and find out what they are missing. I hope the book I wrote will help over time.

I’ve been sharing my joy of programming with other developers in open source for fourteen years now. For ten of those years, I have maintained the library, now known as Eclipse Collections, at the Eclipse Foundation.

Here’s a link to a presentation that I gave to the Java Community Process Executive Committee on GS Collections in May 2014. After I gave this talk, the idea of moving GS Collections to the Eclipse Foundation was born. The slides from this talk have been available since May 2014, but I don’t know how many developers regularly read the minutes of long-past JCP Executive Committee meetings.

Eclipse Collections has been available in open source for over a decade now. Anyone can contribute to Eclipse Collections at the Eclipse Foundation, so long as they sign the Eclipse Contributor Agreement. This was the primary problem that Eclipse Collections solved over GS Collections. GS Collections was “free as in beer.” Eclipse Collections is “free as in speech.”

Continuing my journey to improve Java coding

Not many developers get to work on software they believe in for twenty-two years. I am fortunate to be one of them. I finished writing and published my first Java programming book in March 2025, which was twenty-one years after I began my journey working on the Java library that would eventually become known as Eclipse Collections. This was the story I shared before publishing.

My Twenty-one Year Journey to Write and Publish My First Book

If you are not using Eclipse Collections, and you believe that the Java code you write today is the best code you could possibly write in Java, then I would encourage you to read this blog. It might help open your eyes to new possibilities.

Refactoring to Eclipse Collections with Java 25 at the dev2next Conference

After I gave this talk, Craig Motlin began sharing OpenRewrite recipes in open source to automate conversions to Eclipse Collections. The recipes hosted by the open source Liftwizard project are located at the link below.

liftwizard/liftwizard-utility/liftwizard-rewrite at main · liftwizard/liftwizard

While writing “Eclipse Collections Categorically: Level up your programming game”, I discovered and shared a way for all Java developers to reorganize their code so that feature-rich APIs are easier to digest, by grouping methods into method categories. This became the basis for how I organized my book, and is why the title is “Eclipse Collections Categorically.” Stealing my own thunder, I shared the discovery of how to simulate method categories in Java for free in a blog for all Java developers to benefit from. It may not come as a surprise that I learned this categorization approach from Smalltalk over thirty years ago.

Grouping Java methods using custom code folding regions with IntelliJ

If you don’t believe that Smalltalk could still have an impact on the productivity of Java developers thirty years later, then read the blog above. One of the first things you will see is a Smalltalk Class browser with methods organized into method categories. I have shared my discovery with some of the developers at Oracle who work on the Core JDK team. I have suggested that having method categories as a standard feature in Javadoc would be amazing progress for this now thirty-year-old programming language. Don’t take my word for it though. Watch the following “Ask the Java Architects” video from Devoxx Belgium 2024. Thanks to Stuart Marks for the shout out. 🙏

https://medium.com/media/cac001dda3f6c658492bbb3c0542557b/href

The book: “Eclipse Collections Categorically”

Finally, the book! The book, Eclipse Collections Categorically: Level up your programming game, has been available in print and digital versions since March 2025. You can find out more about the available versions of the book (with links to reading samples) at the link below. I encourage you to check out the reading samples before buying.

I don’t believe Generative AI can teach you the lessons in this book. I sincerely doubt that any Generative AI solution out there will tell you there is a better way to write and organize Java collections code. This book introduced the idea of adding method categories to the Java language. I have shown how to simulate method categories in IntelliJ and other IDEs by leveraging Custom Code Folding Regions. This is a short-term substitute while we wait for proper method category support in Java.
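Here is a minimal sketch of what simulated method categories can look like. It assumes IntelliJ IDEA's `//region` and `//endregion` custom folding comments; other IDEs may recognize different marker comments. The `CategorizedList` class is hypothetical and is not the Eclipse Collections API. Its method names echo the Smalltalk-style names (`select`, `reject`, `collect`, `anySatisfy`, `allSatisfy`) that Eclipse Collections also uses. Each region plays the role of one method category that the IDE can fold and expand.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;
import java.util.function.Predicate;

// Hypothetical list wrapper, used only to illustrate grouping methods into
// "method categories" with IntelliJ-style custom folding regions.
public class CategorizedList<T> {
    private final List<T> items;

    public CategorizedList(List<T> items) {
        this.items = new ArrayList<>(items); // defensive copy
    }

    //region Filtering
    public CategorizedList<T> select(Predicate<? super T> predicate) {
        List<T> result = new ArrayList<>();
        for (T each : items) {
            if (predicate.test(each)) {
                result.add(each);
            }
        }
        return new CategorizedList<>(result);
    }

    public CategorizedList<T> reject(Predicate<? super T> predicate) {
        // keep the elements that do NOT satisfy the predicate
        return select(each -> !predicate.test(each));
    }
    //endregion

    //region Transforming
    public <V> CategorizedList<V> collect(Function<? super T, ? extends V> function) {
        List<V> result = new ArrayList<>(items.size());
        for (T each : items) {
            result.add(function.apply(each));
        }
        return new CategorizedList<>(result);
    }
    //endregion

    //region Testing
    public boolean anySatisfy(Predicate<? super T> predicate) {
        for (T each : items) {
            if (predicate.test(each)) {
                return true;
            }
        }
        return false;
    }

    public boolean allSatisfy(Predicate<? super T> predicate) {
        for (T each : items) {
            if (!predicate.test(each)) {
                return false;
            }
        }
        return true;
    }
    //endregion

    //region Converting
    public List<T> toList() {
        return new ArrayList<>(items);
    }
    //endregion

    public static void main(String[] args) {
        CategorizedList<Integer> numbers = new CategorizedList<>(List.of(1, 2, 3, 4, 5));
        System.out.println(numbers.select(n -> n % 2 == 0).toList()); // [2, 4]
        System.out.println(numbers.reject(n -> n % 2 == 0).toList()); // [1, 3, 5]
        System.out.println(numbers.collect(n -> n * 10).toList());    // [10, 20, 30, 40, 50]
        System.out.println(numbers.anySatisfy(n -> n > 4));           // true
        System.out.println(numbers.allSatisfy(n -> n > 0));           // true
    }
}
```

When every region is folded, the class collapses to its category names (Filtering, Transforming, Testing, Converting). The result loosely resembles the method-category pane of a Smalltalk class browser.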

Generative AI has its place, and it’s not in here

While the whole world was focused on leapfrogging one another learning new and shiny Generative AI tools, I was writing a book by hand the old-fashioned way, minus pen and paper or a typewriter. I used IntelliJ IDEA and AsciiDoc for the writing part. For the publishing I used Asciidoctor and AsciidoctorJ. Using AsciidoctorJ, I was able to implement my publishing pipelines using good old Java, with a touch of Eclipse Collections. I mean, why would I possibly set out to write a book about Eclipse Collections and not actually use Eclipse Collections? That would be so unlike me.

I worked on the cover with my sister, who is a designer. Credit to her for making the joy of programming and method categories come alive on the cover.

The only thing you will find in this book is one developer’s love of programming in print form.

Eclipse Collections Categorically code samples

There is a repo with code samples from the book available at the following link. I will be adding more samples over time. There is much more than these code samples in the book, but if you want to experiment with them on your own, then I hope they bring you some measure of joy.

GitHub - sensiblesymmetry/ec-categorically: Resources for Eclipse Collections Categorically book

The Joy of Smalltalk and Method Categories

Here’s a teaser from my book. If you’re not familiar with Smalltalk, Smalltalk collections, and where the idea of method categories comes from, these two pages from the appendix of my book should be a quick and fun read.

An appendix dedicated to Smalltalk Collections in Eclipse Collections Categorically

Thank you for taking the time to read